Lossy Compression for Integrating Event Cameras

My early work in this space was published in the proceedings of the IEEE Data Compression Conference 2021, and I presented the paper remotely in March. The conference is among the most prestigious venues for data compression, and I am encouraged by the interest shown in our sensing model.

The full work is available on IEEE Xplore. The abstract is below.

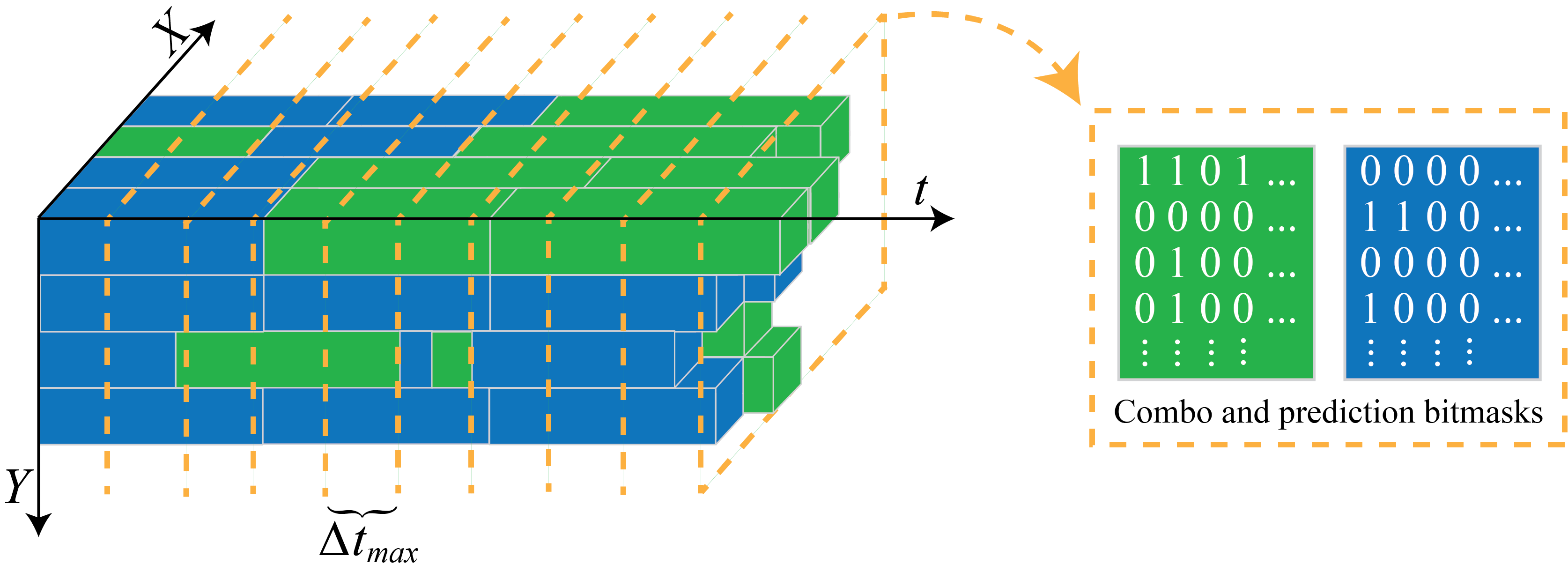

Event cameras are biologically-inspired sensors that upend the framed, synchronous nature of traditional cameras. Singh et al. proposed a novel sensor design wherein incident light values may be measured directly through continuous integration, with individual pixels’ light sensitivity being adjustable in real time, allowing for extremely high frame rate and high dynamic range video capture. Up to this point, there has been little research into compressing this event data, and even less that has investigated a robust method of lossy compression. This paper makes an inroad in this area, proposing a lossy model-based compression scheme with user-controlled quality levels and examining its compression efficiency and reconstructed image quality for several synthetic scenes. In our experiments, we observe space savings over the original event stream upwards of 95% with only minor losses in reconstructed image quality.
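For readers unfamiliar with the metric, space savings is conventionally defined as one minus the ratio of compressed size to original size. The snippet below is a minimal sketch of that arithmetic only. The function names and byte counts are hypothetical, chosen for illustration, and are not taken from the paper or its code.

```python
def space_savings(original_bytes: int, compressed_bytes: int) -> float:
    """Fraction of the original size eliminated by compression."""
    if original_bytes <= 0:
        raise ValueError("original_bytes must be positive")
    return 1.0 - compressed_bytes / original_bytes


def compression_ratio(original_bytes: int, compressed_bytes: int) -> float:
    """Original size divided by compressed size."""
    if compressed_bytes <= 0:
        raise ValueError("compressed_bytes must be positive")
    return original_bytes / compressed_bytes


if __name__ == "__main__":
    # Hypothetical sizes, not measurements from the paper.
    original = 1_000_000   # bytes in the uncompressed event stream
    compressed = 50_000    # bytes after lossy compression

    savings = space_savings(original, compressed)
    ratio = compression_ratio(original, compressed)
    print(f"space savings: {savings:.1%}, compression ratio: {ratio:.0f}:1")
```

Under this definition, space savings of 95% correspond to a compression ratio of 20:1, so savings "upwards of 95%" mean the compressed stream is at most one twentieth the size of the original.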

Authors:

Andrew C. Freeman and Ketan Mayer-Patel