Motion Segmentation and Tracking for Integrating Event Cameras

My more recent work on developing a robust event compression scheme with motion compensation has been accepted for publication at the ACM Multimedia Systems 2021 conference. The paper is application-oriented and shows off some unique scenarios where an asynchronous camera really shines. The conference will be held in September in Istanbul, Turkey. I’m hoping travel is permitted by that point!

The paper is now available on the ACM Digital Library here. The abstract is below.

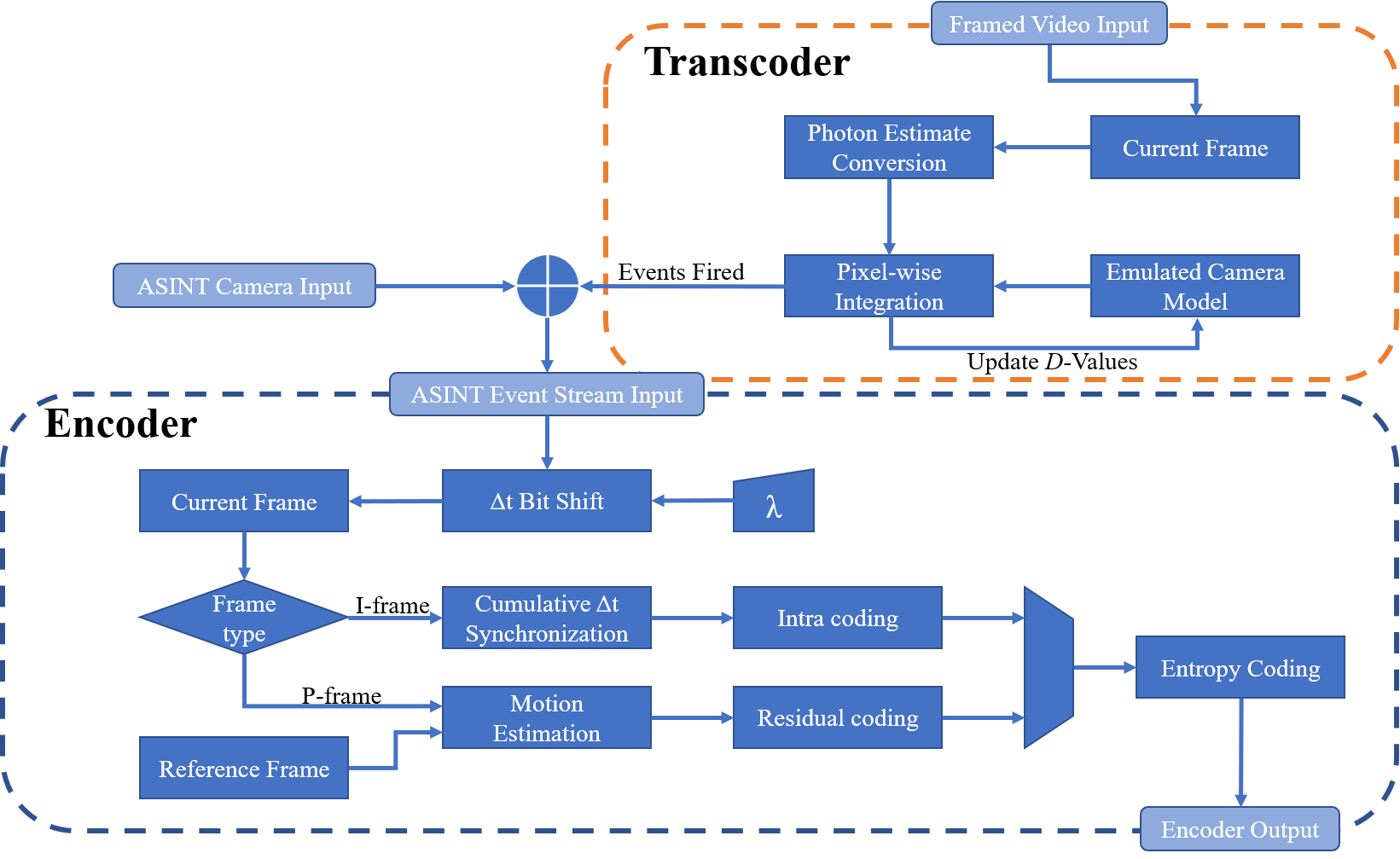

Integrating event cameras are asynchronous sensors wherein incident light values may be measured directly through continuous integration, with individual pixels' light sensitivity being adjustable in real time, allowing for extremely high frame rate and high dynamic range video capture. This paper builds on lessons learned with previous attempts to compress event data and presents a new scheme for event compression that has many analogues to traditional framed video compression techniques. We show how traditional video can be transcoded to an event-based representation, and describe the direct encoding of motion data in our event-based representation. Finally, we present experimental results proving how our simple scheme already approaches the state-of-the-art compression performance for slow-motion object tracking. This system introduces an application "in the loop" framework, where the application dynamically informs the camera how sensitive each pixel should be, based on the efficacy of the most recent data received.

Authors:

Andrew C. Freeman, Chris Burgess, and Ketan Mayer-Patel